Policy analysts often worry about the possibility of states stifling AI innovation by passing a patchwork of complex and even conflicting legislation. By way of example, SB 1047, a bill in California dictating the development of leading AI models, evoked concerns that a couple dozen Golden State legislators could meaningfully bend the AI development curve without input from the rest of the nation. Governor Gavin Newsom vetoed the legislation, but fears remain that a handful of states could pass legislation with significant extraterritorial effects, altering the pace and nature of AI research and development. Whatever degree of government oversight and regulation is warranted, critics argue, ought to be undertaken by Congress.

Much less has been written about the role states can play, should play, and in some cases already are playing in fostering AI adoption. In contrast to efforts to regulate the development of AI, efforts to shape and accelerate adoption of AI are neutral or even complementary: one state’s AI incubator program, for example, does not inhibit another state’s effort to do the same (nor the ability of the federal government to follow suit). This is the sweet spot for state involvement: not dictating AI development but rather shaping its diffusion.

A brief counterfactual analysis illustrates the utility of this division of regulatory authority. Consider how state-by-state regulation of internal combustion engine development might have unfolded in the last century. Michigan might have established minimal restrictions, Tennessee could have implemented specific safety protocols, and Kansas might have required certain environmental standards. This fragmented approach would have made the Model T, for instance, more accessible in some regions than others. Manufacturers likely would have avoided certain jurisdictions where compliance costs were prohibitively high. Affluent Americans could have circumvented these inconsistencies by purchasing vehicles from less regulated states. However, most citizens would have remained in the past longer than necessary, traveling by horse while their rich neighbors honked at them. The conclusion is clear: states should not control the development of innovative technologies because such decisions require national deliberation. States do, however, fulfill their proper regulatory function by implementing policies that reflect their residents’ preferences regarding how rapidly these technologies should be adopted within their boundaries.

A comparison of AI proposals currently pending before state legislatures helps clarify this critical difference between regulating the development of a general-purpose technology and shaping its adoption. The New York Artificial Intelligence Consumer Protection Act, introduced in the state assembly and senate, serves as an example of the sort of regulation that might alter the trajectory of AI development. Among numerous other provisions, the bill would require AI developers to document how they plan to mitigate harms posed by their systems (subject to an audit by the state attorney general), create a risk management policy, and document several technical aspects of their development process.

Imagine this act multiplied by 50. Developers may find themselves in an endless compliance maze. One day they would bend to the expectations of New York’s attorney general, then the whims of Washington’s Department of Commerce the next, and finally Idaho’s AI Regulation Panel a week or so later. This task would be made all the more difficult given that states already struggle to agree on basic regulatory concepts, such as how to define artificial intelligence.

Large AI firms could likely handle these burdens, but startups may flounder. The net result is a less competitive AI ecosystem. This reality may partially explain why Apple, Alphabet, Microsoft, and Meta have swallowed up a number of AI companies over the past ten years—founders looking at the regulatory horizon likely realize that it is easier to exit than wait around for 50 auditors to come kick their tires.

We’ve seen this dynamic play out over a longer term in a related context. Researchers at the Information Technology and Innovation Foundation calculated that the patchwork approach to privacy laws could impose more than $1 trillion in compliance costs over a decade, with small businesses bearing a fifth of those costs. There’s no reason to emulate this pattern in the AI context.

States should instead stay in their regulatory lane, implementing community preferences for local technology adoption rather than dictating broader terms of technological development. Utah stands out as an example. The Utah Office of AI Policy does not impose any regulations with extraterritorial ramifications. Instead, it invites AI companies to partner with the state to develop a bespoke regulatory agreement. Any AI entity that serves Utah customers may work with the Office to develop an agreement that may include regulatory exemptions, capped penalties, cure periods to remedy any alleged violations of applicable regulations, and specifications as to which regulations do apply and how.

This variant of a regulatory sandbox avoids the potential overreach of a one-size-fits-all regulation, while still affording Utahns a meaningful opportunity to accelerate the diffusion of AI tools across their state. What’s more, this scheme avoids the pitfalls of SB 1047 look-alikes because it does not pertain to the development of the technology, just its application. This dynamic regulatory approach allows the State to deliberately think through how and when it wants to help spread certain AI tools. The Spark Act, pending before the Washington State Legislature, likewise exemplifies an adoption-oriented bill. If passed, Washington would partner with private AI companies to oversee the creation of AI tools targeting pressing matters of public policy, such as the detection of wildfires.

States can serve as laboratories for innovation by deliberately incorporating AI into their own operations. Take Maryland’s plans to rely on AI in managing its road network. Rather than mandating private adoption, Maryland demonstrates the technology’s utility by using it to identify common bottlenecks, propose new traffic flows, and generally help residents get from A to B in a safer and faster manner. This approach allows residents to witness AI’s practical benefits before deciding whether to embrace similar tools in their businesses or communities. This example reveals how states can shape adoption through demonstration rather than dictation, creating a pull rather than a push dynamic.

States also play a crucial role when it comes to preparing their residents for a novel technological wave. Oklahoma’s partnership with Google to provide 10,000 residents with AI training should make residents less likely to fear AI and better equipped to harness it. By ensuring diverse participation in the AI economy, Oklahoma may avoid the pitfalls of previous technological transformations that exacerbated existing inequalities, with some communities experiencing a brighter future several years, if not decades, before their neighbors. This program is another instance in which a state can shape AI adoption without dictating AI development. Massachusetts offers yet another example. Its AI Hub promises to empower residents to thrive in the Age of AI via workforce training opportunities.

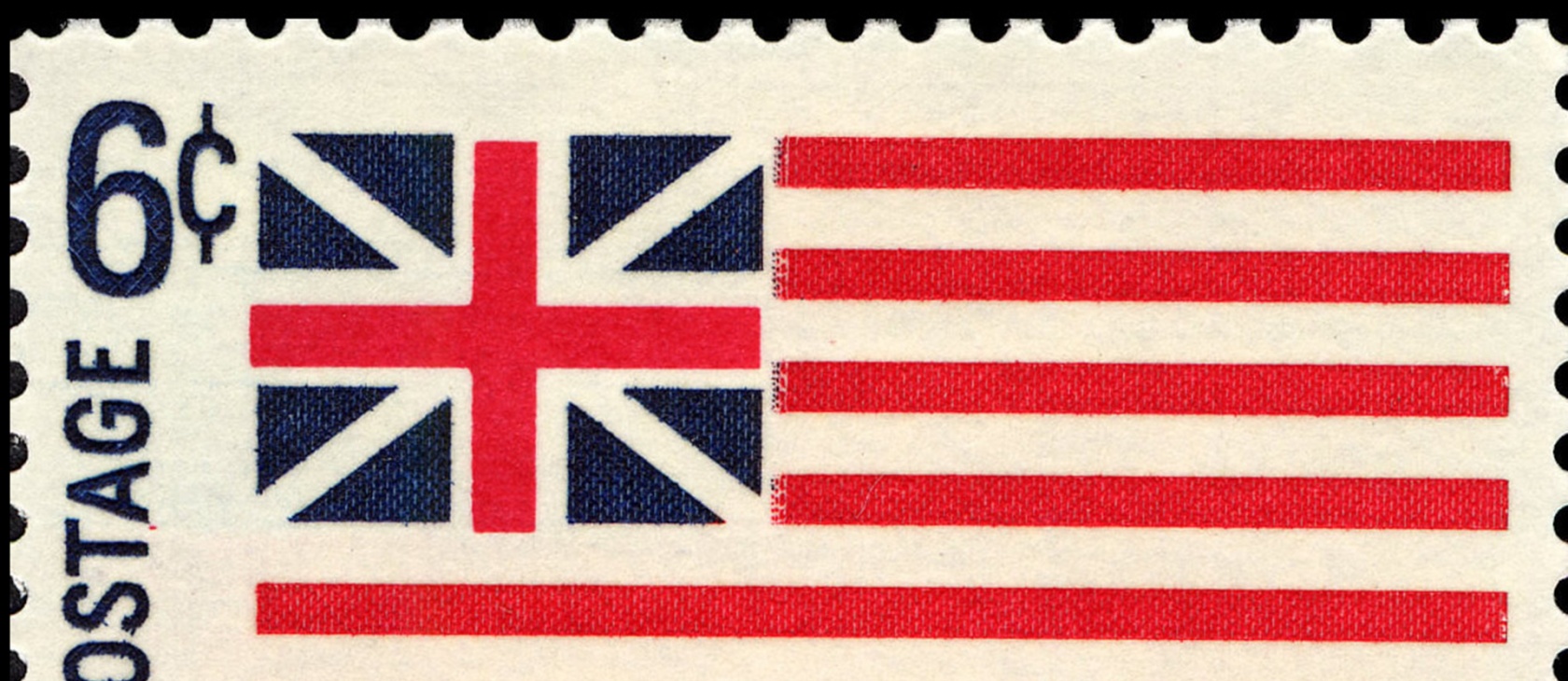

These positive examples show that the federalist system, with its distinct spheres of authority, offers a compelling framework for AI governance. Just as our Founders envisioned a division between national and local concerns, so too should we partition responsibility for AI. Development requires the uniform hand of federal oversight, while adoption benefits from the diverse approaches that emerge from state-level experimentation. This distinction serves both innovation and democracy by allowing breakthrough technologies to emerge under consistent national standards while preserving communities’ right to determine how quickly these innovations reshape their daily lives.

Though some communities may wish to avoid the turbulence associated with incorporating any new technology (let alone one as novel as AI) into their economies, cultures, and systems, that choice is likely off the table. More than 40 percent of the working-age population already uses AI to some degree. Of that user base, 33 percent use AI nearly every day. That figure will likely increase as AI tools continue to advance and address an ever-greater set of tasks. Americans may also find that AI literacy, meaning knowing how to use AI tools and understanding their risks and benefits, is an economic necessity. Fortune 500 companies have leaned hard into AI and have expressed an interest in hiring AI-savvy employees. Though this seeming inevitability makes it appear as though AI is a force beyond our control, states remain the actors best suited to directing AI toward the common good (defined by that state’s community) while leaving others to do the same.

In sum, the coming decade will likely witness an acceleration of AI capabilities that rivals or exceeds the rapid diffusion of the Internet in the 1990s. Then, as now, the key question is not whether to adopt these technologies but how to do so in a manner that respects community values while maximizing benefits. States that thoughtfully shape adoption—creating regulatory sandboxes, demonstrating practical applications, addressing equity concerns, and building human capital—will likely see their residents thrive in this new era. Those that overreach into development questions may unintentionally hamper innovation, while those that neglect adoption entirely risk watching from the sidelines as the future unfolds without them.

The path forward requires respecting this division of regulatory labor. Congress should establish clear, preemptive guidelines for AI development while empowering states to implement adoption strategies that reflect their unique circumstances and values. This balanced approach preserves both technological momentum and democratic choice. It would ensure that Americans collectively shape AI rather than merely being shaped by it.